If we scrape text from HTML/XML sources, we’ll need to get rid of all the tags, HTML entities, punctuation, non-alphabetic characters, and any other characters that are not part of the language. The general methods for such cleaning involve regular expressions, which can filter out most of the unwanted text. Some systems, however, retain important English characters such as full stops, question marks, and exclamation marks. Consider an example where you want to perform sentiment analysis on human-generated tweets and classify them as very angry, angry, neutral, happy, or very happy. In such a task, punctuation like a run of exclamation marks can carry part of the emotion, so stripping it may throw away useful signal.
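The regular-expression cleaning described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function name `clean_text` and the `keep_punctuation` flag are hypothetical names introduced here, and the patterns assume plain English text scraped from simple HTML.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")      # anything that looks like an HTML/XML tag
WHITESPACE_RE = re.compile(r"\s+")   # runs of spaces, tabs, and newlines


def clean_text(raw, keep_punctuation=False):
    """Strip tags, decode HTML entities, and drop non-alphabetic characters.

    With keep_punctuation=True, full stops, question marks, and exclamation
    marks are retained (an assumption suited to tasks like sentiment analysis).
    """
    text = TAG_RE.sub(" ", raw)      # remove tags, leaving a space in their place
    text = html.unescape(text)       # turn entities such as &amp; into characters
    unwanted = r"[^A-Za-z.?!\s]" if keep_punctuation else r"[^A-Za-z\s]"
    text = re.sub(unwanted, " ", text)
    return WHITESPACE_RE.sub(" ", text).strip()


tweet = "<p>I &amp; my team LOVE this!!!</p>"
print(clean_text(tweet))                         # I my team LOVE this
print(clean_text(tweet, keep_punctuation=True))  # I my team LOVE this!!!
```

Note that this simple filter also splits contractions (for example, “don’t” becomes “don t”), so a real pipeline would likely need extra rules for apostrophes and for non-English alphabets.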

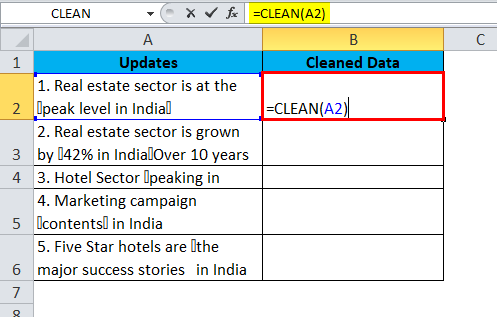

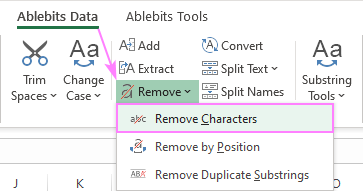

The main steps of text data cleansing are listed below with explanations:

Removing Unwanted Characters

This is a primary step in the process of text cleaning.

All the sacred texts that have influenced religions, all the compositions of poets and authors, all the scientific explanations by the brightest minds of their times, all the political documents that define our history and our future, and every kind of explicit human communication: these “all”s define the importance of the data available in the form of what we call text. In my previous article, Effective Data Preprocessing and Feature Engineering, I explained the general process of preprocessing using three main steps: transformation into vectors, normalization, and dealing with missing values. This article covers the steps that come before transforming text data into vectors, which are mostly about data cleaning.

Text is just a sequence of words or, more precisely, a sequence of characters. But when we deal with language modelling or natural language processing, we are usually more concerned with words as a whole than with the character-level depth of our text data. One reason is that, in language models, individual characters don’t carry much “context”. Characters like ‘d’, ‘r’, ‘a’, ‘e’ hold no context individually, but rearranged into a word they can form “read”, which describes an activity you’re probably doing right now. Vectorization is just a method of converting words into long lists of numbers, which may hold some complex structure that only a computer can interpret, using some machine learning or data mining algorithm. But even before that, we need to perform a sequence of operations on the text so that it can be “cleaned”. The process of data “cleansing” can vary depending on the source of the data.
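To make the idea of turning words into lists of numbers concrete, here is a minimal sketch of word-level vectorization using integer indices. It is only one simple scheme among many, and the helper names `build_vocabulary` and `vectorize`, as well as the choice of reserving index 0 for unknown words, are assumptions made for this illustration.

```python
def build_vocabulary(texts):
    """Assign an integer index to every distinct word, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1  # index 0 is reserved for unknown words
    return vocab


def vectorize(text, vocab):
    """Replace each word with its index; unseen words map to 0."""
    return [vocab.get(word, 0) for word in text.lower().split()]


corpus = ["you read the article", "the article is about text"]
vocab = build_vocabulary(corpus)

print(vectorize("you read text", vocab))   # [1, 2, 7]
print(vectorize("you read books", vocab))  # [1, 2, 0]

# Character-level view of the same word: the letters alone carry little context.
print(list("read"))                        # ['r', 'e', 'a', 'd']
```

The sketch splits on whitespace only, which is why the cleaning steps matter: leftover tags or stray punctuation would otherwise end up as separate “words” in the vocabulary.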

Effectively Pre-processing the Text Data Part 1: Text Cleaning

The content of this article is directly inspired by the books “Deep Learning with Python” by François Chollet and “An Introduction to Information Retrieval” by Manning, Raghavan, and Schütze. Some infographics used in this article are also taken from these books.

Text is a form of data that has existed for millennia throughout human history.
